research

some topics of interest I have been working on

feature-based attention

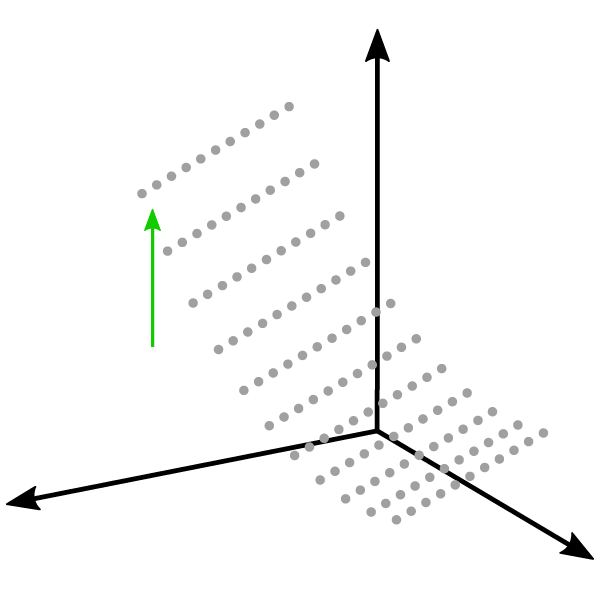

Researchers have typically divided up the study of attention into several different domains, reflecting the different types of visual information that can be present in the world. Attention can be directed towards different spatial locations, objects, features, or times, for example. Feature-based attention refers to how we attend to basic visual features, such as colors, orientations, or motion directions.

In my research, I investigated some fundamental aspects of feature-based attention, such as:

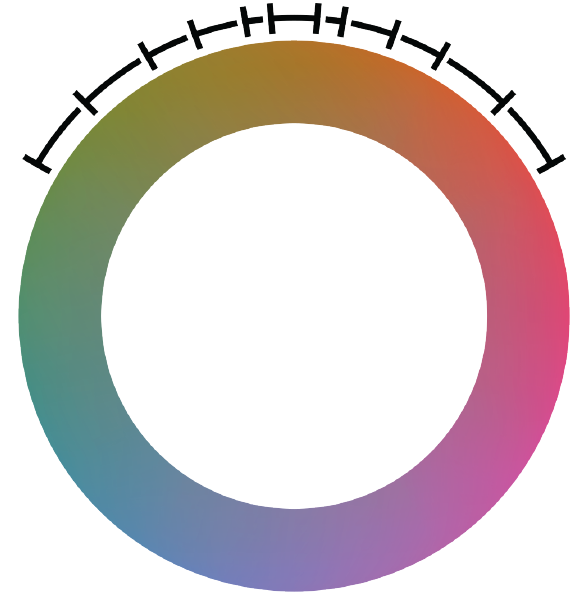

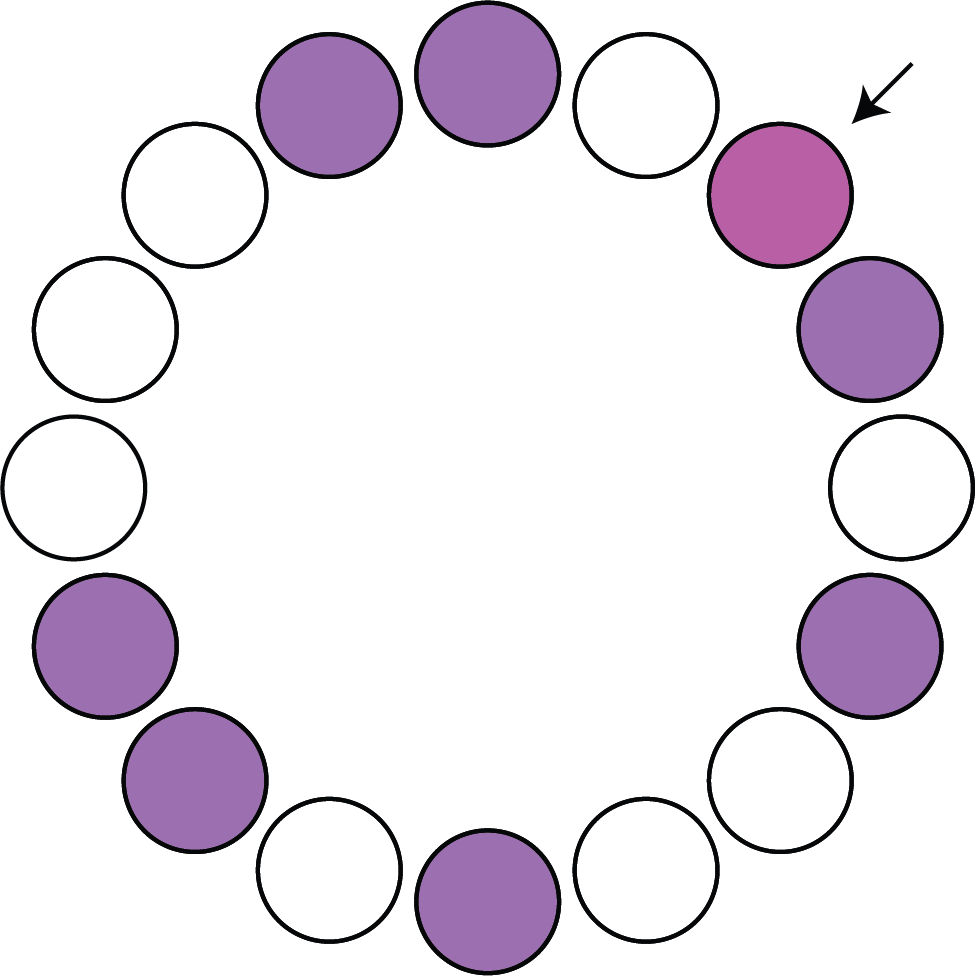

- How broadly or narrowly can we focus our attention on features? (Chapman & Störmer, 2023; Özkan et al., 2025)

- How is the efficiency of attention affected by the similarity between target and distractor features? (Chapman & Störmer, 2022; Chapman & Störmer, 2024)

- Can feature-based attention account for the findings of object-based attention? (Chapman & Störmer, 2021)

relevant papers

representational geometry and attention

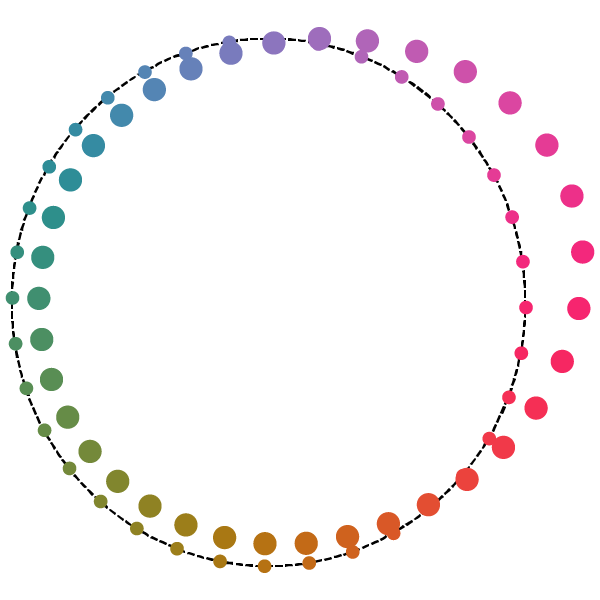

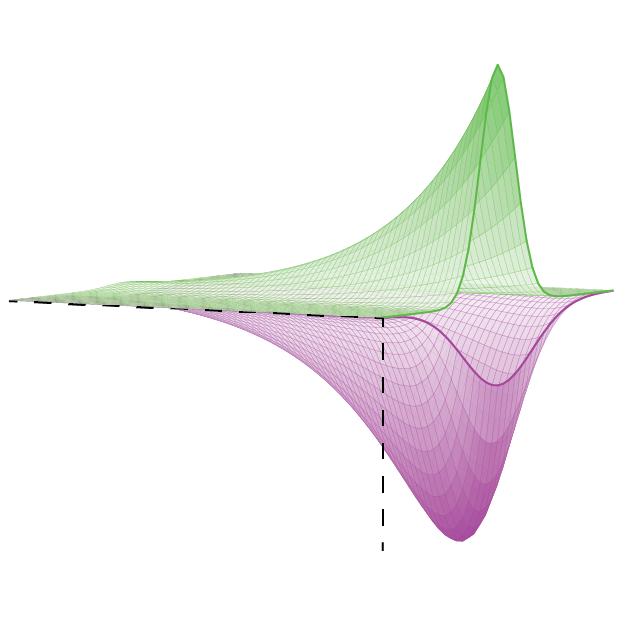

Representational geometry is a computational framework that aims to understand the structure of neural activity and how it relates to processing of different types of information. One of the key concepts in representational geometry is similarity—the idea being that things in the real world that we perceive to be similar are also likely to generate similar patterns of activity in the brain.
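The link between similarity and activity patterns can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration: a hypothetical population of 50 units with Gaussian tuning along a simplified linear feature axis, and correlation distance as the dissimilarity measure. It is not the analysis used in any of the papers cited here.

```python
import numpy as np

# Hypothetical population: 50 units whose preferred feature values
# tile a simplified linear feature axis from 0 to 1.
PREFERRED = np.linspace(0.0, 1.0, 50)


def population_response(stimulus, width=0.2):
    """Gaussian-tuned response of every unit to one feature value (assumed tuning shape)."""
    return np.exp(-0.5 * ((stimulus - PREFERRED) / width) ** 2)


def dissimilarity_matrix(patterns):
    """Pairwise correlation distance (1 - Pearson r) between activity patterns (rows)."""
    patterns = np.asarray(patterns, dtype=float)
    return 1.0 - np.corrcoef(patterns)


# Two nearby feature values and one distant value (arbitrary illustrative choices).
stimuli = [0.40, 0.45, 0.80]
patterns = np.stack([population_response(s) for s in stimuli])
rdm = dissimilarity_matrix(patterns)

print(np.round(rdm, 3))
```

In this toy setup, the two nearby feature values produce more similar activity patterns (a smaller distance) than the distant pair, which is the sense of "similarity" described above.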

Recently, I have been exploring how representational geometry can account for different experimental findings related to attention. In one line of research, I found that when people attend to a particular color, they perceive other colors as less similar to the target, suggesting that attention biases perception (Chapman et al., 2023). Building on this, during my postdoc I completed a project measuring how attention affects the representational geometry of orientation (Chapman et al., 2025).

Reviewing a wide range of the attention literature, we have also argued that many findings spanning different domains of attention (spatial, feature-based, etc.) can potentially be unified within the framework of representational geometry (Chapman & Störmer, 2024).

relevant papers

- Review

Representational spaces as a unifying framework for attention. Trends in Cognitive Sciences, 2024


computational modeling

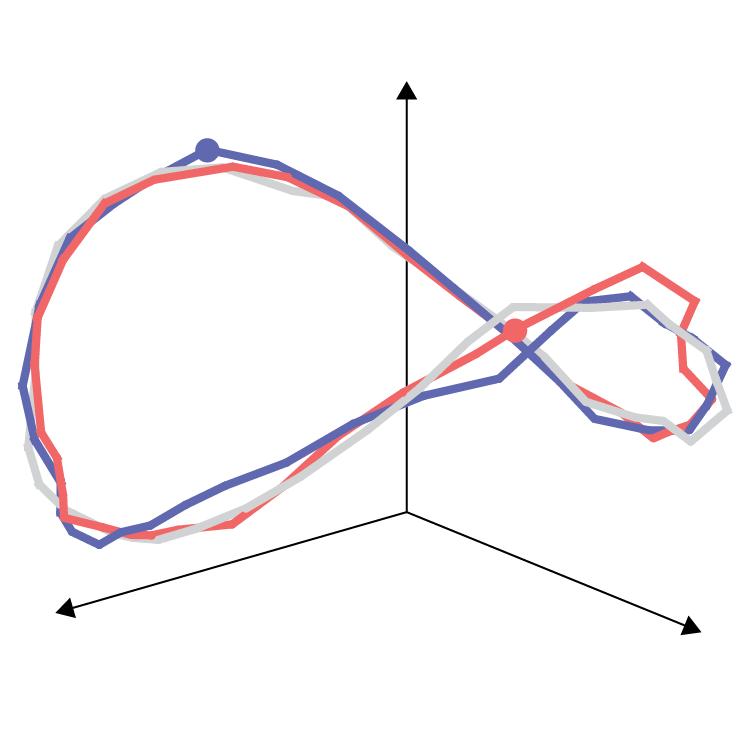

In my postdoc, I have been developing computational models of visual processing that take into account the temporal dynamics of the brain. We recently submitted a manuscript in which we tested a new model, the Dynamic Spatiotemporal Attention and Normalization model (D-STAN), and measured its ability to reproduce several known non-linear temporal response properties (Chapman & Denison, 2025).

In ongoing work, I am assessing how trade-offs in attention across time might be accounted for by the dynamics of normalization in D-STAN (Chapman & Denison, 2024).

Going forward, I plan to make computational modeling a core part of my research. I am particularly excited by the idea of modeling the effects of attention in neural populations and examining how these changes relate to the perceptual and neural representation of different stimuli.